Unicorns and Rainbows: The Reality of Implementing AI in a Corporate

The AI Bubble: Hype, herd mentality, and harsh realities

Unicorns and Rainbows. Is it a metaphor? Is it a reality? Maybe both. Think of a unicorn dancing on top of a radiant rainbow. But what does it actually mean?

Humanity has always been drawn to utopia — a perfect, idealized future where all problems are solved. Believing that the world is steadily marching toward this vision is tempting. In the AI landscape, the unicorn (you have noticed the 5th leg, right?) represents the elevated promises, wild imagination, and relentless hype that paint a picture of transformative, almost magical technology.

AI trends move at lightning speed, leaving the real engineers behind to fix the mess. Learn how not to chase trends and how to architect real solutions instead.

The rainbow, however, represents the real world: full of potential but riddled with imperfections, inconsistencies, and systemic barriers.

Just like the stock market, AI has its ebbs and flows. Everything might seem to skyrocket, but a slight shift (technical debt, regulatory burdens, or enterprise realities) can send it crashing back to earth. The question is not whether AI is a transformative force (there is no doubt it is!) but whether we’re being realistic about its trajectory.

This article discusses the reality of using AI in the enterprise environment: the technical debt, the knowledge gaps, and the herd effect that fuels the AI bubble. By critically analyzing these factors, we aim to offer a realistic roadmap for businesses navigating the complex AI landscape.

1. The AI bubble

We have been working in Data & AI for over 10 years, and the AI bubble has never been so big. AI is everywhere: on our laptops, phones, and websites. The CEOs of Nvidia, Microsoft, Meta, and OpenAI are spreading a lot of news about revolutionary AI technology: how AI agents will replace humans, how we will reach AGI soon, and how AI will be everywhere. We live in an AI bubble, and even though the technology is real, applying it to actual use cases and driving business value is much harder than advertised.

The technological advancements in the AI field are significant, and the value AI generates is real. However, there are still many gaps that people who try to build AI products see clearly. Two factors contribute to the AI bubble: knowledge gaps and the herd effect. They are related but distinct.

2. The Role of Knowledge Gaps

The gap between AI insiders and the general public is one of the drivers of the AI bubble. The saying “Knowledge is power” holds for AI as well, in both its development and its implementation.

People who are deeply invested in the development of AI are fully aware of the nuances, challenges, and limitations that come with the implementation of AI-based solutions.

On the other hand, AI outsiders are constantly awestruck by the marketing terms associated with AI, which present an entirely different world of possibilities. This knowledge gap lets misconceptions spread at an alarming rate, so the hype takes precedence over the reality of what AI systems can offer.

3. Herd Effect: Fear of Missing Out (FOMO)

Another significant factor driving the AI hype is the herd effect, or Fear of Missing Out (FOMO).

As more companies invest in AI and tout their successes, others feel compelled to follow suit, fearing they’ll fall behind if they don’t adopt AI technologies. This rush often leads to deploying AI solutions without a thorough understanding of their applicability or potential ROI, further inflating the AI bubble. The result is a market saturated with AI buzzwords and solutions that may not deliver the promised transformative impact.

AI models (by which we mean foundation models) are often used in places where a standard ML model should be used instead. This adds complexity and decreases reliability.

4. Back to basics

Most companies cannot reliably bring standard machine learning models to production, and they lack monitoring practices.

Despite what many people think, workflows that include AI models are, on average, more complex to bring into production and monitor. This holds even in the simplest scenario, with no RAG or fine-tuning involved, where you just call a third-party API.

In too many cases, we seem to have forgotten the basic principles of machine learning and blindly rely on what that API outputs. This is the danger of AI hype: AI has become accessible to everyone, and many software engineers treat it as just another API call.

What could go wrong? The data model has not changed, the code has not changed (and neither has the environment where it gets executed), and the version of the API has not changed. But this is the beauty of machine learning: even if everything in your control has stayed the same, the model can unexpectedly start performing poorly because the distribution of the input data has changed.

This does not just happen with standard machine learning models; it also happens with AI models. We just have fewer means to influence that behavior, and tuning the prompt becomes an essential part of the process.
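To make the idea of a shifting data distribution concrete, here is a minimal sketch of a drift check based on the Population Stability Index (PSI). It is an illustration only, not a method the examples in this article rely on: the function name `psi`, the choice of 10 bins, and the idea of tracking a single numeric feature (for an LLM workflow this could be something like prompt length) are all assumptions.

```python
import math
from typing import List, Sequence


def psi(reference: Sequence[float], current: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a reference sample and a current sample.

    Bins are equal-width and derived from the reference sample only.
    """
    lo, hi = min(reference), max(reference)
    # Fall back to a width of 1.0 if all reference values are identical.
    width = (hi - lo) / bins or 1.0

    def fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values into the edge bins
            counts[idx] += 1
        eps = 1e-6  # avoids log(0) when a bin is empty
        return [max(c / len(values), eps) for c in counts]

    ref, cur = fractions(reference), fractions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Hypothetical data: last month's feature values vs. this week's.
last_month = [i / 100 for i in range(100)]
this_week = [0.5 + i / 200 for i in range(100)]
drift_score = psi(last_month, this_week)
```

A commonly cited rule of thumb treats a PSI above roughly 0.2 as a shift worth investigating, but that threshold is a convention rather than a guarantee, and it should be tuned per feature. The point is that nothing in the code, the environment, or the API version needs to change for this score to move.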

5. Real-world, 2025

Experts say that 2025 will be the year of AI agents. But is it really true?

While the AI hype machine continues to boom, real-world adoption tells a different story. The promise of autonomous AI agents seamlessly operating across enterprises remains largely aspirational. The reality? AI in enterprise is still a work in progress — complex, expensive, and often misaligned with actual business needs.

Take BBVA, the Spanish bank that went all in on OpenAI’s technology. They deployed over 2,900 AI models to enhance productivity, yet integrating them into their existing systems turned out to be a logistical nightmare. AI doesn’t operate in a vacuum; it needs to connect with legacy infrastructure, existing workflows, and strict regulatory requirements. And that’s where reality bites — scaling AI across an enterprise is exponentially harder than rolling out a chatbot.

The UK government’s attempt to integrate AI into its welfare system faced significant limitations. At least six AI prototypes, designed to enhance staff training, improve job center services, and streamline disability benefit processing, were discontinued due to issues in scalability, reliability, and insufficient testing. Officials acknowledged several “frustrations and false starts,” highlighting the complexities involved in deploying AI within public services.

A study highlighted several obstacles in developing and deploying AI agents within enterprises. Security concerns were identified as a top challenge by leadership (53%) and practitioners (62%). Other significant challenges included data governance, performance issues, and integration complexity. These findings underscore the multifaceted difficulties organizations face in implementing AI agents effectively.

Reflecting on these examples, it’s evident that the widespread adoption of AI agents in enterprise settings faces significant limitations. While 2025 may usher in extensive research, proofs of concept (POCs), and minimum viable products (MVPs), the path to full-scale integration remains complex.

6. AI in a corporate environment

Big companies operate under strict rules, structured workflows, and a constant focus on ROI. Unlike agile startups that can adapt on the fly, large organizations have to deal with complex approval processes, compliance checks, and risk management. All this makes adopting AI a slower process, and the idea of rapid transformation often feels more like a distant dream than something achievable.

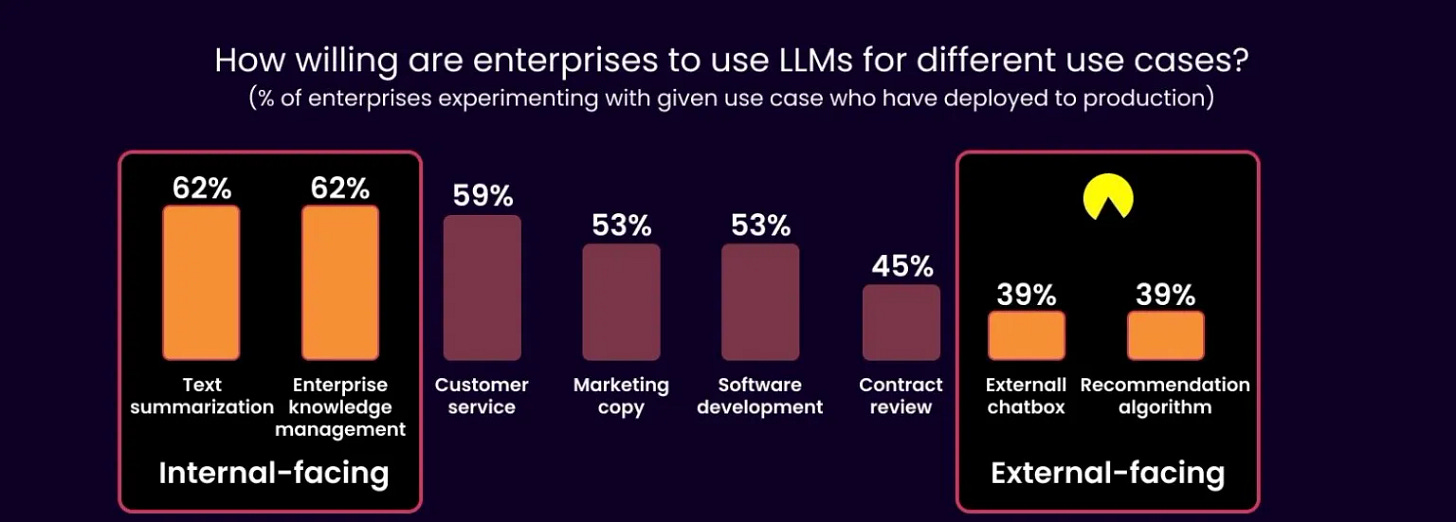

Chip Huyen lists the most common LLM applications in her book AI Engineering. Enterprises are risk-averse and prefer to deploy internal-facing applications first. From what we have seen so far, even though there is initial support from the leadership to deploy such applications, not enough funding goes to those projects (and likely never will), as they do not generate direct business value. We are not saying there is no value. There is, but it is challenging to convince stakeholders.

In enterprises, the most common use cases that generate direct business value are related to customer service (routing customers to the right agents or processes) and contract review. These use cases have been around for a while and have historically been NLP-heavy; AI models have helped to improve them.

Some companies have tried to use LLMs for recommendations and chatbots, and the world has seen enough failures. Here are some examples:

DPD’s customer-facing chatbot, “Ruby,” was designed to assist customers with their inquiries. However, due to insufficient safeguards, a user was able to provoke the bot into swearing and composing a poem criticizing the company itself. This incident underscores the importance of implementing strict content moderation protocols and regularly updating AI systems to prevent such occurrences.

Similarly, Pak’nSave’s AI meal planner app, intended to provide innovative recipe suggestions, malfunctioned and recommended a combination of ingredients that would produce chlorine gas, labeling it as an “aromatic water mix.” This highlights the critical need for rigorous testing and validation of AI outputs, especially in applications directly impacting consumer health and safety.

It feels like not everyone has learned from these incidents, and we regularly see companies launching AI applications without clear business value and with poor guardrails, mainly for “marketing purposes”. Let’s hope it will not turn out to be bad marketing, as users will try to make the app do things it is not supposed to do “just for fun”.
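As a rough illustration of what a last line of defense can look like, the sketch below checks a model's output against a blocklist before it reaches the user. Everything here is hypothetical: the patterns, the fallback message, and the names `check_output` and `GuardrailResult` are invented for this example, and a production system would typically combine such rules with a moderation model and human review.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical patterns for illustration only; real lists are use-case specific.
BLOCKED_PATTERNS = [
    re.compile(r"\bchlorine gas\b", re.IGNORECASE),
    re.compile(r"\bbleach\b.*\bammonia\b", re.IGNORECASE | re.DOTALL),
]
FALLBACK = "Sorry, I can't help with that. Let me connect you to a human agent."


@dataclass
class GuardrailResult:
    allowed: bool
    text: str                     # what is actually shown to the user
    reason: Optional[str] = None  # why the output was blocked, for logging


def check_output(model_output: str) -> GuardrailResult:
    """Return the model output unchanged, or a safe fallback if a pattern matches."""
    for pattern in BLOCKED_PATTERNS:
        if pattern.search(model_output):
            return GuardrailResult(False, FALLBACK, f"matched {pattern.pattern!r}")
    return GuardrailResult(True, model_output)


risky = check_output("Mix bleach and ammonia for an aromatic water mix.")
safe = check_output("Try pasta with tomato sauce and basil.")
```

A static blocklist like this is easy to bypass, which is exactly why users poking at an app "just for fun" tend to win. It is a floor, not a ceiling: the value lies in having an explicit, testable checkpoint between the model and the customer.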

There are exceptions. Some companies have built good LLM-powered recommendations, for example, Zalando. Its assistant has well-implemented guardrails and is useful for customers (it helps to find items that are otherwise hard to find via search). In October 2024, Zalando expanded its AI-powered assistant to all 25 markets, supporting local languages. This expansion aims to provide customers with personalized fashion advice and insights into emerging local trends, thereby enhancing the shopping experience.

7. Areas of attention & conclusions

There is great potential to leverage AI in a corporate setting. However, to hope for enterprise adoption, we must consider security gaps, controlled environments, transparency and traceability, and a way to monitor and evaluate AI systems.

In enterprise ecosystems, AI systems need large volumes of data, including personal and proprietary information. Their role is to enhance workflows and boost efficiency, but they need access to critical systems, which can be considered a security risk. Organizations must focus on preventing unapproved access to data, breaches, and compliance issues.

Threat actors can deploy malware that mimics AI behavior to breach networks, skew decisions, or steal secrets. AI agents act autonomously, making them harder to detect and control. This creates a major challenge: real-time oversight of AI systems.

Monitoring is a persistent issue. Few companies have proper systems in place, and AI’s complexity makes it even harder. Owners must fully understand every decision their AI makes.
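One small step toward that kind of traceability is to record every model call in a structured audit log. The sketch below wraps an arbitrary text-in, text-out function; the wrapper name `with_audit_log`, the record fields, and the stand-in `fake_model` are assumptions made for illustration, not part of any specific vendor's API.

```python
import hashlib
import json
import time
from typing import Callable, List


def with_audit_log(
    model_call: Callable[[str], str],
    sink: Callable[[str], None] = print,
) -> Callable[[str], str]:
    """Wrap a text-in/text-out model call so each invocation emits a JSON audit record."""

    def wrapped(prompt: str) -> str:
        started = time.perf_counter()
        output = model_call(prompt)
        record = {
            "timestamp": time.time(),
            # Hash instead of raw text so the log does not leak personal or proprietary data.
            "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
            "prompt_chars": len(prompt),
            "output_chars": len(output),
            "latency_s": round(time.perf_counter() - started, 4),
        }
        sink(json.dumps(record))
        return output

    return wrapped


def fake_model(prompt: str) -> str:
    """Stand-in for a real model or third-party API call."""
    return prompt.upper()


audit_records: List[str] = []
logged_model = with_audit_log(fake_model, sink=audit_records.append)
answer = logged_model("summarize this contract")
```

Logging alone does not explain a decision, but without it there is nothing to monitor, evaluate, or audit after the fact. In practice the sink would be a log pipeline or database rather than an in-memory list.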

AI’s transformative potential is undeniable, but the path from hype to reality is complex and challenging. Rather than chasing unicorns and rainbows, organizations must take a grounded, strategic approach — one that prioritizes real business needs, robust security frameworks, and a deep understanding of AI’s limitations. The road ahead is uncertain, but one thing is clear: the way we answer these questions will determine whether AI becomes a lasting force for good or just another passing bubble.

What do you think — are we ready?

Nice article!

There is an AI bubble, more or less, and people who work with ML stuff see it.

We just keep throwing cash at a technology that inherently won't fix itself due to its inner workings (talking about Agents, LLMs here being auto-regressive, and merely high-quality token classifiers).

Nobody is arguing that Agentic AI and LLM-based systems don't work - they do, but not at the flip of a switch, so grounded decisions are required before adoption.

This survey from Vention is also a good read on AI Adoption Rate:

https://ventionteams.com/solutions/ai/adoption-statistics